In part one of this series of blog posts, I made the case for automated testing and introduced the TEAL smart contract we are testing.

To get and set up the example project for this blog post and configure your Algorand Sandbox, please see “Getting the code” in part one.

In part two of this series, we actually get into the automated testing for our smart contract.

Set the faucet account

Besides the setup in part one of this series, there is one more thing we have to do now before we run our tests.

We must set the mnemonic for our “faucet” account that we’ll use to create additional accounts for use by automated tests.

For local testing in the Algorand Sandbox, you can simply use the same “creator” account that we used in part one. The faucet account is simply a well-funded account we use to fund our test accounts. Use the instructions from part one to choose an account and export its mnemonic, then put that mnemonic in the following environment variable:

    export FAUCET_MNEMONIC=<mnemonic_for_the_faucet_account>

Under Windows you must use set instead of export, like this:

    set FAUCET_MNEMONIC=<mnemonic_for_the_faucet_account>

If you are using Hone’s customized Algorand Sandbox mentioned in part one, you already have a hardcoded faucet account with the mnemonic specified in the README.md file. In this case there’s no need to shell into the Algod container and find the mnemonic; you can just use the known mnemonic for the hardcoded account.
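A forgotten or truncated FAUCET_MNEMONIC tends to surface as a confusing failure deep inside a test run, so it can help to check it up front. The following is a minimal sketch of such a check, not the code from the example project: loadFaucetMnemonic is a hypothetical name, and the only facts it relies on are the environment variable described above and that Algorand mnemonics are 25 words long.

```javascript
// Hypothetical helper (not part of the example project): reads the faucet
// mnemonic from the environment and fails early with a readable message.
function loadFaucetMnemonic(env = process.env) {
    const mnemonic = (env.FAUCET_MNEMONIC || "").trim();
    if (mnemonic === "") {
        throw new Error("FAUCET_MNEMONIC is not set.");
    }

    // Algorand mnemonics are 25 words long, so any other word count is
    // almost certainly a copy-and-paste mistake.
    const words = mnemonic.split(/\s+/);
    if (words.length !== 25) {
        throw new Error(
            `FAUCET_MNEMONIC should contain 25 words, found ${words.length}.`
        );
    }

    // Normalise the whitespace between words.
    return words.join(" ");
}
```

In a real test setup, the returned string could then be handed to algosdk.mnemonicToSecretKey to obtain the faucet account.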

Run the “actual” tests

After you have set up your sandbox and set the environment variable for your faucet account, you are ready to run the automated tests that exercise our TEAL smart contract.

Invoke the tests like this:

npm run test-actualThis runs our automated tests under Jest, a popular JavaScript testing framework.

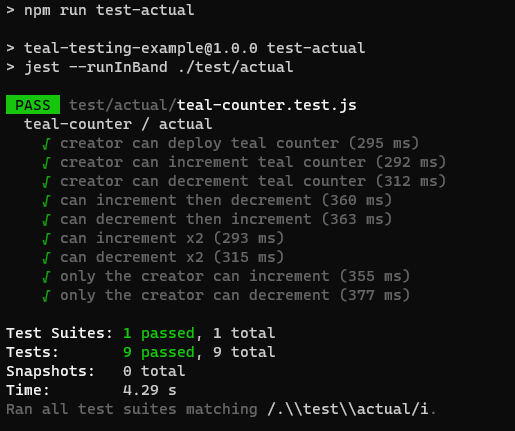

Hopefully you’ll also see that all the tests are passing:

A real test suite

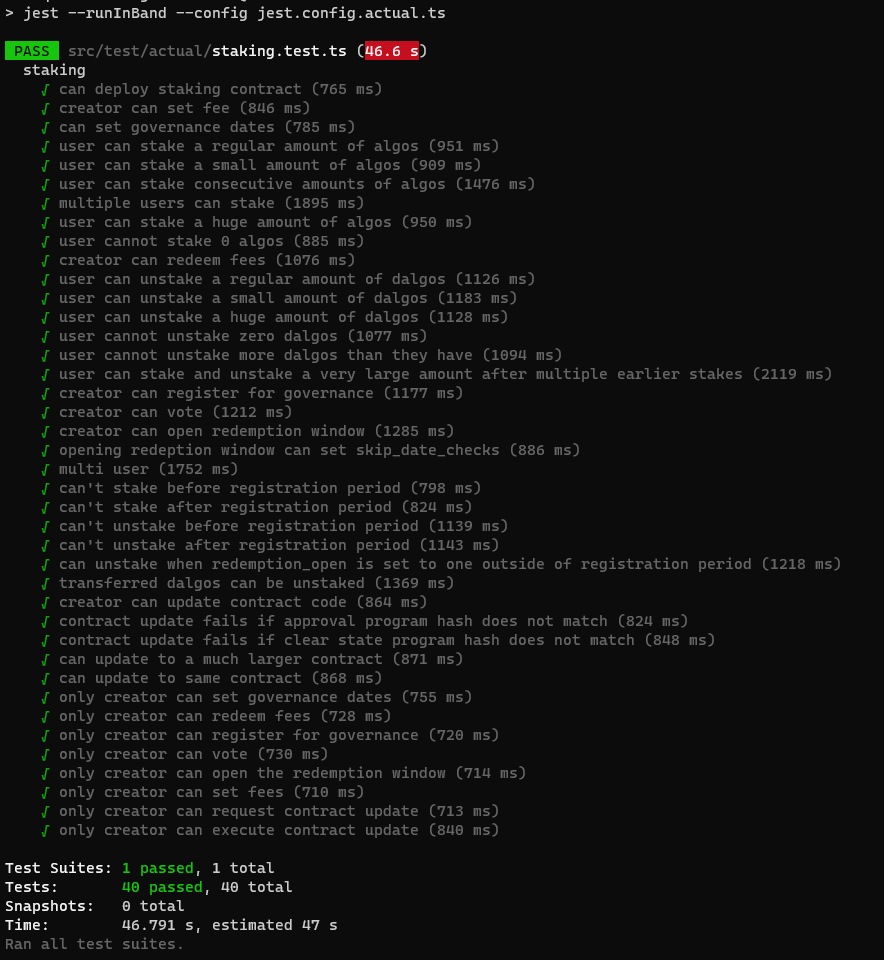

Ok, so the test suite for the teal-counter is pretty trivial. Here’s what a real test suite looks like for a longer and more complex smart contract. This is the output from testing Hone’s liquidity staking contract:

An example test

To understand how the teal-counter tests function, we’ll consider just one of the tests.

The following JavaScript code (an extract from teal-counter-test.js) tests the increment “method” of the TEAL smart contract and verifies that it does actually increment the global state variable:

    test("creator can increment teal counter", async () => {
        const initialValue = 15;
        const { appId, creatorAccount } =
            await creatorDeploysCounter(initialValue);

        const { txnId, confirmedRound } =
            await creatorIncrementsCounter();

        await expectGlobalState({
            counterValue: {
                uint: initialValue + 1,
            },
        });

        await expectTransaction(confirmedRound, txnId, {
            txn: {
                apaa: [ "increment" ],
                apid: appId,
                snd: creatorAccount.addr,
                type: "appl",
            },
        });
    });

Let’s break it down. The first thing we do in each test is deploy the smart contract to the sandbox:

    const initialValue = 15;
    const { appId, creatorAccount } =
        await creatorDeploysCounter(initialValue);

Next, and most importantly, we invoke the “method” we’d like to test. In this case we are invoking the increment method of the smart contract:

    const { txnId, confirmedRound } =
        await creatorIncrementsCounter();

If that call to creatorIncrementsCounter succeeds, then we know that this method of the contract has executed successfully in this case. This is an implicit vote of confidence that our TEAL code is working ok, but we can be more explicit than that, so the next thing we do is check that our contract has actually incremented the counter:

    await expectGlobalState({
        counterValue: {
            uint: initialValue + 1,
        },
    });

If that code passes, it means the contract successfully incremented the counter. We have verified that counterValue is one more than its original value.

Just for good measure and increased confidence we also check that the appropriate transaction has indeed been committed to the blockchain:

    await expectTransaction(confirmedRound, txnId, {
        txn: {
            apaa: [ "increment" ],
            apid: appId,
            snd: creatorAccount.addr,
            type: "appl",
        },
    });

I’m sure you are wondering how all these functions work:

- creatorDeploysCounter

- creatorIncrementsCounter

- expectGlobalState

- expectTransaction

Reading the code above, there is no indication that under the hood we are using the Algorand JavaScript SDK (algosdk) to deploy the contract, invoke the increment method, read the global state and find the actual transaction that was committed to the blockchain in our sandbox. That's because we have abstracted away the underlying use of algosdk and blockchain communication into an easy-to-use set of helper functions.

You can see the full suite of tests for the teal-counter here:

https://github.com/hone-labs/teal-testing-example/blob/main/test/actual/teal-counter.test.js

A language for testing

What we have done with our helper functions is craft a meaningful language for our testing. This language is specific to this particular product (in this case the teal-counter) and is implemented through helper functions like creatorDeploysCounter, creatorIncrementsCounter and creatorDecrementsCounter. These functions provide a product-specific abstraction over algosdk and make it much easier for us to write automated tests.

Using meaningful names for these functions helps us create readable tests. Readable tests give us a helping hand to mentally check the logic that we are supposed to be testing.

We build out our “testing language” while implementing our automated tests, and we can reuse this growing vocabulary to create new tests composed from different combinations of it. This makes for very flexible testing and helps us to create new types of tests, say by doing different combinations of operations or different orders of operations.

You can see this in some of the later teal-counter tests, like this one where we test that an increment of the counter can be followed immediately by a decrement:

    test("can increment then decrement", async () => {
        const initialValue = 8;
        await creatorDeploysCounter(initialValue);

        await creatorIncrementsCounter();
        await creatorDecrementsCounter();

        await expectGlobalState({
            counterValue: {
                uint: initialValue, // Back to original value.
            },
        });
    });

Being able to recombine the vocabulary of our testing language in different ways makes it easier to probe for edge cases in our smart contract. It really helps to find (and squash) well-hidden bugs or loopholes in the code.

If you like, you can think of these helper functions as the software development kit (SDK) for our project. In this case we are looking at the “SDK” for the teal-counter project. You can see all the “SDK” functions for the teal-counter on GitHub.

In fact, for your own product you might even want it to be a real SDK! The “SDK” that we build to support our automated testing can be a very good basis for a real public SDK that we might release to encourage our community to participate in the development process.
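To illustrate how cheaply a shared vocabulary recombines, here is a small sketch that treats a test scenario as nothing more than a list of “words”. Everything in it is a stand-in: makeFakeCounterSdk is a hypothetical in-memory substitute that only mimics the names of the real helpers, readCounter and runScenario are invented for this illustration, and nothing here talks to algosdk or the sandbox.

```javascript
// In-memory stand-in for a deployed counter. This is NOT the real
// teal-counter "SDK"; it only borrows the helper names for illustration.
function makeFakeCounterSdk() {
    let counterValue; // Plays the role of the contract's global state.

    return {
        async creatorDeploysCounter(initialValue) { counterValue = initialValue; },
        async creatorIncrementsCounter() { counterValue += 1; },
        async creatorDecrementsCounter() { counterValue -= 1; },
        async readCounter() { return counterValue; }, // Hypothetical helper.
    };
}

// A scenario is just an ordered list of vocabulary words, so trying a new
// combination or ordering means writing a new array.
async function runScenario(initialValue, steps) {
    const sdk = makeFakeCounterSdk(); // Fresh instance for every scenario.
    await sdk.creatorDeploysCounter(initialValue);
    for (const step of steps) {
        await sdk[step]();
    }
    return sdk.readCounter();
}
```

For example, runScenario(8, ["creatorIncrementsCounter", "creatorDecrementsCounter"]) resolves to 8, mirroring the increment-then-decrement test above. The real helpers do the same job against the sandbox, which is where the actual confidence comes from.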

Declarative code

The functions expectGlobalState and expectTransaction are not part of the teal-counter “SDK”. These are more general helper functions that belong in their own code module so that we can reuse them for any future Algorand smart contract project.

Using these functions, like expectGlobalState shown again below, exhibits a very declarative style of programming, one where we express how we want “things to look”, but not “how they should be done”:

    await expectGlobalState({
        counterValue: {
            uint: initialValue + 1,
        },
    });

Declarative coding makes for more readable and expressive test code. Our code is not cluttered up with the details of blockchain communication, if-statements and exceptions.

This style of code is a very concise and understandable description of how things should look at this moment in time. And if things aren’t the way we expect, in this case expectGlobalState will figure it out and throw an exception that fails our test, so we get to know immediately when there’s a problem in our code.
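To make the declarative idea a little more concrete, here is a minimal sketch of the kind of work a helper like expectGlobalState has to hide. It is not the implementation from the teal-counter repository. It assumes the shape of the application info returned by the algod REST API, where global state arrives as a "global-state" array with base64-encoded keys and a type of 2 marks a uint (newer algosdk versions may wrap this differently). The names decodeGlobalState and checkGlobalState are hypothetical.

```javascript
// Converts the raw "global-state" array from algod into a plain object
// keyed by the decoded (UTF-8) state names.
function decodeGlobalState(rawState) {
    const decoded = {};
    for (const { key, value } of rawState || []) {
        const name = Buffer.from(key, "base64").toString("utf8");
        // In the algod REST API, type 1 is a byte slice and type 2 is a uint.
        decoded[name] = value.type === 2
            ? { uint: value.uint }
            : { bytes: value.bytes };
    }
    return decoded;
}

// Hypothetical stand-in for the comparison inside expectGlobalState:
// throws if any expected field is missing or differs from the actual state.
function checkGlobalState(actual, expected) {
    for (const [name, expectedValue] of Object.entries(expected)) {
        const actualValue = actual[name];
        if (actualValue === undefined) {
            throw new Error(`Missing global state "${name}".`);
        }
        for (const [field, want] of Object.entries(expectedValue)) {
            if (actualValue[field] !== want) {
                throw new Error(
                    `Expected ${name}.${field} to be ${want}, got ${actualValue[field]}.`
                );
            }
        }
    }
}
```

The test author only writes the expected object. Fetching, decoding and comparing stay inside the helper, which is what keeps the test itself declarative.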

Finding a transaction

The function expectTransaction is worth mentioning in its own section because it involves some non-trivial code to actually find the transaction that we just committed to the blockchain.

Before expectTransaction can verify the contents of a transaction for us it must be able to retrieve that transaction from the blockchain. To do that it calls down to findTransaction which is presented below:

    async function findTransaction(algodClient, roundNumber, txnId) {
        const block = await algodClient.block(roundNumber).do();
        for (const stxn of block.block.txns) {
            if (computeTransactionId(
                    block.block.gh,
                    block.block.gen,
                    stxn) === txnId) {
                return stxn;
            }
        }

        return undefined;
    }

When we know the id of the transaction and the round number of the block where it was committed, we can search that particular block to find the transaction.

We know the id of the transaction we are seeking and we must compare that against the id for each transaction in the block. The problem is: how do we find the id for each transaction in the block?

It’s not obvious at all how to do this and there appears to be no function in algosdk that will compute a transaction id for us. My heartfelt thanks go out to Benjamin Guidarelli for helping me create the function computeTransactionId that is presented below.

I honestly don’t know how Ben figured this one out, but I’m very happy that he did and that I can now share it with you:

    function computeTransactionId(gh, gen, stib) {
        const t = stib.txn;

        // Manually add gh/gen to construct
        // a correct transaction object
        t.gh = gh;
        t.gen = gen;

        const stxn = {
            txn: algosdk.Transaction.from_obj_for_encoding(t),
        };

        if ("sig" in stib) {
            stxn.sig = stib.sig;
        }

        if ("lsig" in stib) {
            stxn.lsig = stib.lsig;
        }

        if ("msig" in stib) {
            stxn.msig = stib.msig;
        }

        if ("sgnr" in stib) {
            stxn.sgnr = stib.sgnr;
        }

        return stxn.txn.txID();
    }

You can find the full code for computeTransactionId and findTransaction on GitHub.
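Once the transaction has been found, expectTransaction still has to compare it with the fields we listed in the test. The test only names a handful of fields (apaa, apid, snd, type), so a partial, recursive comparison is a natural fit. The sketch below shows one way that could look. It is an assumption about the approach rather than the code from the repository, and findMismatches is a hypothetical name.

```javascript
// Returns a list of human-readable mismatches. An empty list means every
// field named in `expected` is present in `actual` with the same value.
// Fields that exist only in `actual` are ignored (partial matching).
function findMismatches(actual, expected, path = "") {
    const mismatches = [];
    for (const [key, want] of Object.entries(expected)) {
        const here = path ? `${path}.${key}` : key;
        const got = actual == null ? undefined : actual[key];
        if (want !== null && typeof want === "object") {
            // Nested objects and arrays are compared field by field.
            mismatches.push(...findMismatches(got, want, here));
        } else if (got !== want) {
            mismatches.push(
                `${here}: expected ${JSON.stringify(want)}, got ${JSON.stringify(got)}`
            );
        }
    }
    return mismatches;
}
```

A helper built on this would throw when the list is non-empty, which fails the test with a message naming the offending field. Note that values in a raw block are often binary (application arguments, for instance), so a real helper would need to decode them before comparing against strings like "increment".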

A deployment for each and every test

You probably noticed earlier that we are deploying the smart contract for each and every test. You might reasonably be a little shocked at this and be thinking: doesn’t this hurt the performance of our tests?

That’s a fair question. Even though I know how to get good performance from these tests (more on that in the next section) I have to be honest and say that performance really isn’t my priority here.

First and foremost, I want accurate, reliable and deterministic tests. When our tests overlap in the same space (e.g. working on the same data) they can interfere with each other, and this can cause test failures that are effectively false alarms: unnecessary and usually counterproductive failures in our test pipeline. If this causes problems for us, then we will waste our time debugging problems that are simply artifacts of our testing process and not real issues. I don’t like wasting my time. For the best possible chance at having reliable tests we must do our utmost to isolate our tests from each other.

To keep our tests from interfering with each other, we force each of them to operate in its own “virtual space” in the sandbox. This is achieved by creating isolated test accounts (using the function createFundedAccount) and then doing a separate deployment of the smart contract for every test. Each test is run against a separate instance of the smart contract that has been created by a different “creator” account.

If this seems extreme, just know that I would have liked to take this even further. I can imagine allocating a complete ephemeral/temporary sandbox for each test, so that each test runs in its own fresh sandbox instance. I can’t do this because the Algorand Sandbox is actually kind of hefty and if I tried to run numerous instances of it, then I think I really would have a detrimental performance problem on my hands.

“Dev mode” vs “normal mode”

We already touched on “dev mode” in part one. It’s worth revisiting here because enabling dev mode for our sandbox makes our automated tests run much more quickly.

We should use dev mode for our frequent testing while we are coding. Our testing pipeline completes fairly quickly, meaning that we can run it repeatedly as we are coding our smart contract in TEAL and writing tests in JavaScript.

However, it’s also worthwhile testing in “normal mode” because this is more closely reflective of a real Algorand blockchain node and so we can get a better idea of how our smart contract will actually perform in the real world.

Unfortunately normal mode is much slower than dev mode, but we can get the best of both worlds by testing regularly in both. We should run our tests frequently against a dev mode sandbox, many times during our working day, and only occasionally against a normal mode sandbox, say once per day or once every couple of days.

Of course we can automate all this testing in the cloud as well! At Hone we use GitHub Actions to automatically run our tests against our production Algorand sandbox running on Kubernetes whenever code is pushed. But we are getting ahead of ourselves here, so let’s save talk of continuous integration for TEAL smart contracts for another day.

Conclusion

This has been part two of a series of blog posts on automated testing for Algorand smart contracts.

In this post we actually ran automated tests against our Algorand Sandbox. Our code verified that the transactions executed successfully and that they impacted the blockchain in the way we expected.

In part three of this series we’ll take our testing into defensive territory: aggressively attacking our code through automated tests to find edge cases and prove that our code can gracefully handle accidental or malicious abuse.

We’ll also talk about how we can remove the Algorand Sandbox from our testing, learning how to evaluate our TEAL code on a simulated Algorand virtual machine (AVM) so that we have simulated tests that run vastly more quickly than the actual tests we’ve run in this post.

About the author

Ashley Davis is a software craftsman and author. He is VP of Engineering at Hone and currently writing Rapid Fullstack Development and the 2nd Edition of Bootstrapping Microservices.

Follow Ashley on Twitter for updates.

About Hone

Hone solves the capital inefficiencies present in the current Algorand Governance model, where participating ALGO must remain in a user’s account to be eligible for rewards, eliminating the possibility for it to be used as collateral in Algorand DeFi platforms or as a means to transfer value on the Algorand network. With Hone, users can capture governance-reward yield while still using their principal, and the yield generated, by depositing ALGO into Hone and receiving a tokenized derivative called dALGO. They can then take dALGO and use it as collateral in DeFi or simply transfer value on-chain without sacrificing governance rewards.